How Much is the Open Source Ecosystem “Worth?” ⚖️💰📏

Well, let’s start with Python.

(If we’re being honest, Python 2.x and 3.x are practically different languages, but we’ll group them together to keep things simple.)

When we parse these package files, we do more than just calculate a hash. In addition to running common static analysis and QA tools, we build an abstract syntax tree (AST) for every file and run proprietary models for risk, compliance, and valuation.
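The first two steps (hash, then parse) can be sketched with the standard library. This is a minimal illustration, not our actual pipeline: `fingerprint_and_parse` is a hypothetical helper, and tallying AST node types simply stands in for whatever downstream models consume the tree. It also shows why the 2.x/3.x split matters in practice: a Python 3 parser rejects Python 2 syntax outright.

```python
from __future__ import annotations

import ast
import hashlib
from collections import Counter


def fingerprint_and_parse(source: bytes) -> tuple[str, Counter | None]:
    """Hash a file's raw bytes, then try to build its AST (hypothetical helper)."""
    # Content hash: identical files share a digest regardless of package or path.
    digest = hashlib.sha256(source).hexdigest()
    try:
        tree = ast.parse(source)
    except (SyntaxError, ValueError):
        # e.g. Python 2-only syntax such as `print 'hi'`, or stray null bytes
        return digest, None
    # Count node types as a simple stand-in for richer downstream analysis.
    node_types = Counter(type(node).__name__ for node in ast.walk(tree))
    return digest, node_types


digest, nodes = fingerprint_and_parse(b"x = 1\n")
print(digest[:12], nodes["Assign"])
```

A file that only parses under Python 2 still gets a hash but no tree, so under this sketch it would need a separate 2.x-aware parser to be analyzed at all.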

Included in this pipeline are the Halstead metrics. If you’re into formal software engineering, you’ve probably heard of them. If not, you can think of them as a way of estimating the “cost” of writing software from counts of its operators and operands, much like an insurance replacement cost value (RCV) for a house.
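For readers who haven’t seen them, here is a rough sketch of the classic Halstead formulas: vocabulary n = n1 + n2, length N = N1 + N2, volume V = N·log2(n), difficulty D = (n1/2)·(N2/n2), effort E = D·V, and time T = E/18 seconds. The hard part in practice is deciding what counts as an operator or operand; the token-based rule below (operators = punctuation plus keywords, operands = names, numbers, and strings) is a simplifying assumption and will not match tools like radon exactly. The helper names are hypothetical.

```python
import io
import keyword
import math
import tokenize
from collections import Counter

# Halstead's "Stroud number": elementary mental discriminations per second.
STROUD_NUMBER = 18


def halstead_counts(source: str) -> tuple[Counter, Counter]:
    """Split tokens into operators and operands (simplified, assumed rules)."""
    operators: Counter = Counter()
    operands: Counter = Counter()
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.OP or (
            tok.type == tokenize.NAME and keyword.iskeyword(tok.string)
        ):
            operators[tok.string] += 1
        elif tok.type in (tokenize.NAME, tokenize.NUMBER, tokenize.STRING):
            operands[tok.string] += 1
    return operators, operands


def halstead_seconds(source: str) -> float:
    """Estimate Halstead time T = E / 18, in seconds, for one file."""
    operators, operands = halstead_counts(source)
    n1, n2 = len(operators), len(operands)  # distinct operators / operands
    N1, N2 = sum(operators.values()), sum(operands.values())  # total occurrences
    if n1 == 0 or n2 == 0:
        return 0.0  # nothing meaningful to measure
    volume = (N1 + N2) * math.log2(n1 + n2)  # V = N * log2(n)
    difficulty = (n1 / 2) * (N2 / n2)  # D = (n1 / 2) * (N2 / n2)
    effort = difficulty * volume  # E = D * V
    return effort / STROUD_NUMBER


def total_person_hours(sources) -> float:
    """Sum per-file Halstead time across many files and convert to hours."""
    return sum(halstead_seconds(s) for s in sources) / 3600


print(halstead_seconds("def add(a, b):\n    return a + b\n"))
```

Summing `halstead_seconds` over every unique file is exactly the “sum it all up” step, which is why near-duplicate and generated files inflate the total.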

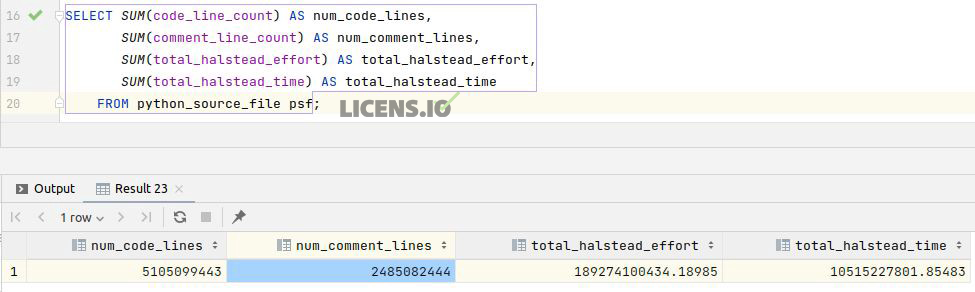

If you sum up the Halstead time for every file, you get a ridiculous number. In one sense, it is clearly an upper bound: many of the unique files are really incremental changes to the same underlying ideas, or are even auto-generated by tooling like SWIG or cffi.

On the other hand, Halstead metrics are generally thought to underestimate the full cost of design, development, and testing. That’s part of why COCOMO exists. So, call it even…

If you take the number at face value, Halstead estimates 10.5 billion person-hours in PyPI alone. From a pure volume perspective, there are 5B lines of code and nearly 2.5B lines of comments. All of this excludes “real” documentation, like the Markdown or HTML that accompanies the code.

10.5 billion person-hours is a lot. Even at $10/hour, we’re talking over $100B. At a more reasonable rate, your eyes start to water. When you throw in conda and GitHub, you’re easily at $1T (depending on how you count forks).
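The back-of-the-envelope arithmetic is just hours times rate. In this sketch, $10/hour is the floor from the text; the $50 and $100 rates are hypothetical “more reasonable” rates chosen only for illustration.

```python
# Face-value Halstead estimate for PyPI alone (from the figures above).
PYPI_PERSON_HOURS = 10.5e9


def replacement_cost(person_hours: float, hourly_rate: float) -> float:
    """Replacement cost in dollars: person-hours times a flat hourly rate."""
    return person_hours * hourly_rate


# $10/hour comes from the text; $50 and $100 are hypothetical rates.
for rate in (10, 50, 100):
    cost_billions = replacement_cost(PYPI_PERSON_HOURS, rate) / 1e9
    print(f"${rate}/hour -> ${cost_billions:,.0f}B")
```

This prints `$105B`, `$525B`, and `$1,050B`, so at $100/hour PyPI by itself already passes $1T before conda or GitHub enter the picture.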

Yes, there are many reasons why these figures overestimate the cost of replacement. But no matter how you adjust the assumptions, the conclusion is the same: the Python ecosystem alone would rank in the top quartile of national GDPs.

And remember — this is just the cost of replacement.

The actual value *created by using* this software, both as an intermediate good for other software and as part of other economic activities, is orders of magnitude greater. Packages like numpy, scipy, matplotlib, sklearn, pytorch, and tensorflow alone have supported an enormous amount of research over the last decade.

And as we look at the democratization of areas like data science and machine learning, value-creating activities using these open source assets will likely grow by another order of magnitude…

At the end of the day, the exact number doesn’t matter. It’s clear that, as a society, we need to get more serious about sustainable, secure open source.

Let’s do it.