Snake JARs, Part III:

Data Science

Sssssss-security

In this series, we’ve been talking about cross-language dependencies — in particular, Python packages vendoring Java JARs. While these issues come up in many different ways and other analyses can provide different perspectives, we’ve started by highlighting how log4j and other common Java packages can end up in PyPI or Conda.

In this post, we’ll be looking at patterns or trends in this data to help organizations understand where these risks might emerge — and how to proactively avoid or address them. To do so, it helps to ask a simple question:

- What types of packages most frequently vendor Java dependencies?

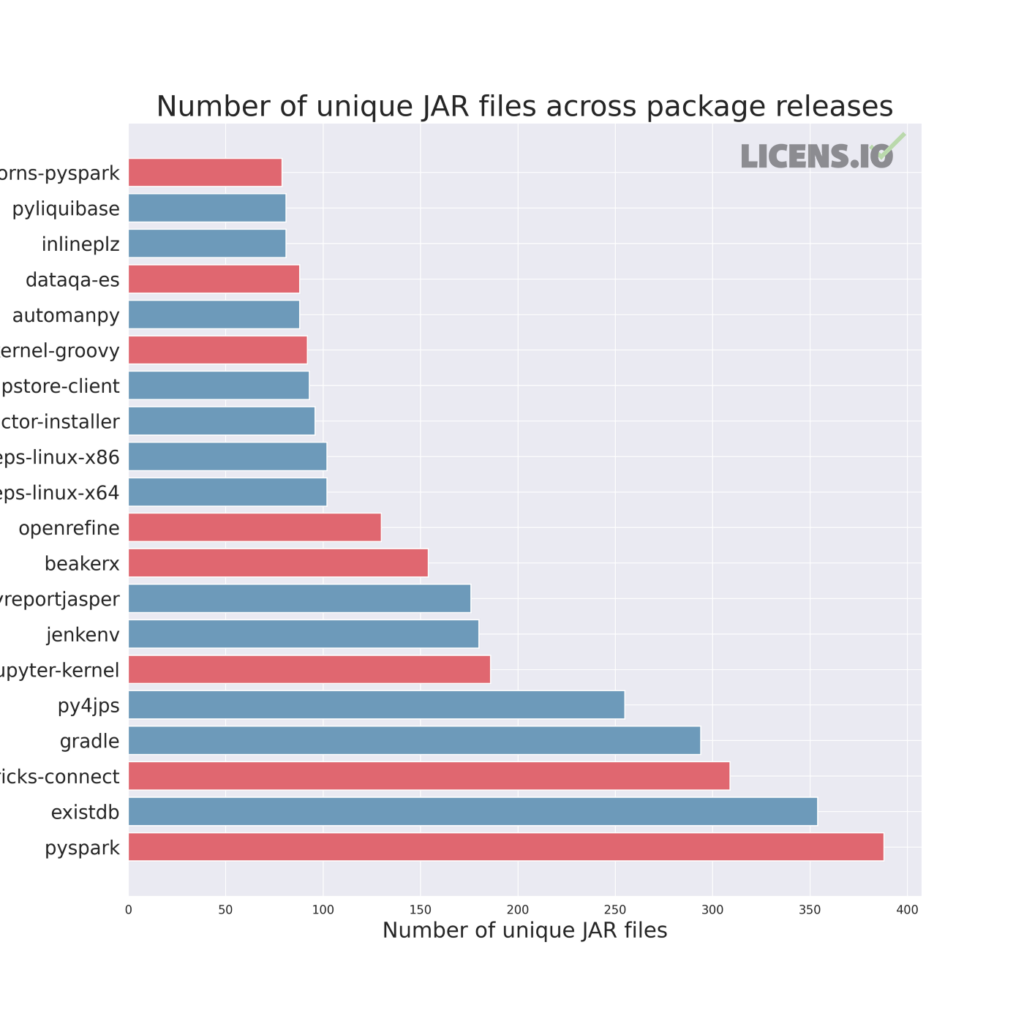

While the figure above isn’t a conclusive answer to the question, it does point strongly to two primary use cases that we’ve encountered: CI/CD and data science. Today, we’re going to focus on data science use cases, which are plotted in red.
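To make the question concrete for a single package, one rough starting point is to count the JARs bundled in its distribution archive. The sketch below relies on the fact that wheels are ordinary zip archives. The function name `unique_jars_in_wheel` is hypothetical, and treating two JARs as identical when their file names match is an assumption made here, not necessarily the way the counts in the figure were produced.

```python
import zipfile
from pathlib import PurePosixPath


def unique_jars_in_wheel(wheel_path):
    """Return the sorted, de-duplicated JAR file names found in a wheel.

    Assumption: uniqueness is judged by file name only, so the same JAR
    vendored in two directories is counted once.
    """
    with zipfile.ZipFile(wheel_path) as archive:
        jar_names = {
            PurePosixPath(member).name
            for member in archive.namelist()
            if member.lower().endswith(".jar")
        }
    return sorted(jar_names)
```

Calling `len(unique_jars_in_wheel(path))` on a downloaded wheel gives a per-package count that can be compared across packages. Hashing each JAR instead of comparing names would be stricter, at the cost of reading every file.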

Data science, analytics, machine learning, AI, BI, reporting: no matter what your organization calls these teams or which organizational unit they reside in, they are frequently treated as a special case. Because their members are often technical users who require flexibility and access to data sources, they are typically granted access to a wide range of systems, including self-service cloud infrastructure and internal databases, warehouses, and lakes.

However, while many data scientists or machine learning researchers can program, they are not typically trained as developers. More importantly, they are typically outside the scope of SDLC processes used for production software. In general, data scientists care about pain points related to accessing, combining, and processing data — reliability and security are not primary concerns. As a result of these common control gaps and day-to-day incentives, data science groups are frequently the source of high-impact risks.

Take, for example, Apache Spark and its relative Databricks, which account for three of the top twenty packages by unique JAR count in our figure above. Both Spark and Databricks typically expose a number of external services, including web-based administration and development interfaces, traditional RPC endpoints, and modern APIs. Because these platforms are typically designed to aggregate multiple internal data sources, these externally facing services are prime targets for accessing and exfiltrating sensitive information.

Similarly, Jupyter (the successor to the IPython project) and the related BeakerX project are two of the most popular environments for data science development. Within these environments, users can execute arbitrary commands via web-based terminals or write scripts in Python, Julia, R, Groovy, Scala, Clojure, Kotlin, Java, and SQL, to name a few.

Since these environments almost always have direct access to internal data sources, they are a threat actor’s dream. Who needs to package up and deliver shellcode when it’s as easy as New > Terminal in Jupyter? And when most data science environments allow direct access to unredacted and unencrypted data stores, there’s no need to discover and circumvent traditional network protections. Clearly, these environments can become dangerously fertile ground for the wrong people…

In our experience, data science environments require flexibility that does not align with traditional SDLC compliance approaches. As a result, an increased focus on education and active monitoring is frequently more effective at reducing risk while managing business impact and expectations.
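As one small illustration of what active monitoring could look like, the sketch below walks an installed Python environment and reports which top-level packages carry JAR files. The function name `find_vendored_jars` is hypothetical, and the approach only finds JARs sitting on disk as `.jar` files. It does not identify versions or known vulnerabilities, so it is a starting inventory and not a full scanner.

```python
from collections import defaultdict
from pathlib import Path


def find_vendored_jars(site_packages):
    """Map each top-level entry in a directory to the JAR files beneath it.

    Paths in the result are relative to `site_packages` and use forward
    slashes so that reports look the same on every platform.
    """
    root = Path(site_packages)
    found = defaultdict(list)
    for jar in root.rglob("*.jar"):
        if not jar.is_file():
            continue  # skip directories that happen to end in .jar
        relative = jar.relative_to(root)
        found[relative.parts[0]].append(relative.as_posix())
    return {package: sorted(paths) for package, paths in sorted(found.items())}
```

To inspect the current interpreter’s environment, the directory can be taken from the standard library, for example `find_vendored_jars(sysconfig.get_paths()["purelib"])`. Running a report like this on a schedule and comparing the results over time is one low-friction way to notice when a new package brings Java dependencies with it.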

The solution, in reality, is simple: actively engage with your data science and machine learning teams to make sure they are aware of and care about the risks. Work with them to develop policies and procedures that reflect the organization’s goals and the team’s capabilities and knowledge — but don’t accumulate risk unknowingly and uncaringly.