Surveying Scikit-learn Usage — Part I

In the world of machine learning and data science, few packages are better known than scikit-learn. Its publication in JMLR has over 52,000 citations.¹ And if you were working in machine learning before sklearn first really hit the scene back around 2011, you’ll appreciate why.

Back in the 2000s, most of us poor souls were running our research in environments like Matlab, Orange, or R. If you used Matlab, you were typically caught up in toolbox licensing headaches or struggled to share code with colleagues trying to avoid the cost with GNU Octave.

And if you used Orange, well…let’s just say that Java earned its reputation for heap allocation cost and memory usage. Back when DDR2 was new, you’d spend a pretty penny to get an x86 machine up to 16GB, and still wonder where it all went when you tried to train your models.

…R? Well, I’m going to save my opinions about R for another post.

I remember first coming across the universe of scipy toolkits — referred to shorthand as scikits. My first experiences were with the image and time series packages, scikit-image and scikits.timeseries. It was around 2010, and I was finally making the jump to move all of my old Matlab code over to the numpy, scipy, and scikits.* universe.

If you’re curious, this was still before pandas was really usable, which meant that all of your feature engineering was still done in numpy and scipy.

I don’t recall exactly which project drove me to it. It probably had to do with USPTO dockets or Treasury auctions, where I think I was using SVMs at the time. Tired of using LibSVM or LibLinear directly, I found a young Python project that looked to provide pretty good support for the underlying C and Fortran procedures…and, well, the rest is history, personally.

Since then, I can’t imagine more than a week has gone by without using sklearn. Though the leading edge of academic interest has since moved on to the world of deep nets, I still have hundreds of models and clients that will probably continue to run sklearn for a decade to come. Somehow, I even managed to find time to put in 17 PRs and issues over the years.

Last week, we took a similarly nostalgic look at IPython — but in addition to my walk down memory lane, we also looked at the impact of IPython empirically on the ecosystem. We’ll do the same thing this week for scikit-learn, broken up into a few discrete chunks.

To start, let’s look at who adopted scikit-learn in the community. As usual, we’ll be using our licens.io platform, which resolves and normalizes all of these references.

First, an illuminating anecdote. You might wonder: which PyPI package was the first to depend on sklearn? Those of you who have worked with the team might guess one of the INRIA packages led by Alexandre Gramfort or Gaël Varoquaux…but the answer, as far as our data is concerned, is actually the paddle library, which implemented the Proximal Algorithm for Dual Dictionaries Learning (PADDLE).

While paddle is no longer under active development, there were three releases on PyPI — 1.0.0, 1.0.1, and 1.0.2. And in release 1.0.2, paddle added an MNIST test using three scikits.learn references:

First use of sklearn in PyPI — paddle 1.0.2

Now, most analyses would miss paddle. The distribution didn’t contain a requirements.txt file. Its setuptools parameters in setup.py also didn’t specify any dependencies whatsoever, let alone scikits-learn.

Thankfully, our technology diligence platforms at licens.io perform deep inspection of every file. Trusting surface-level, self-reported metadata is a recipe for trouble.
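To make the idea concrete, here is a minimal sketch of what source-level detection could look like. It is not how licens.io works internally; the function names `imported_modules` and `uses_sklearn` and the regex fallback are assumptions made for illustration. The point is only that imports can be read from the code itself, even when setup.py declares nothing.

```python
import ast
import re

# Namespaces treated as scikit-learn: the current name and the older scikits.learn.
SKLEARN_ROOTS = ("sklearn", "scikits.learn")

# Approximate fallback for files the Python 3 parser rejects (e.g. Python 2 code).
# It only captures the first module named on each import line.
IMPORT_LINE = re.compile(r"^\s*(?:from|import)\s+([A-Za-z_][\w.]*)", re.MULTILINE)


def imported_modules(source: str) -> set[str]:
    """Return the dotted module names imported by a piece of Python source."""
    try:
        tree = ast.parse(source)
    except SyntaxError:
        # Legacy code may not parse on Python 3; scan import lines instead.
        return set(IMPORT_LINE.findall(source))
    modules = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            modules.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 skips relative imports such as `from .sklearn import x`.
            modules.add(node.module)
    return modules


def uses_sklearn(source: str) -> bool:
    """True if any import targets sklearn or the older scikits.learn namespace."""
    return any(
        name == root or name.startswith(root + ".")
        for name in imported_modules(source)
        for root in SKLEARN_ROOTS
    )
```

Running `uses_sklearn` over every `.py` file in a distribution would flag a package like paddle regardless of what its metadata says. A production scanner would also need to handle dynamic imports, vendored copies, and non-Python files, none of which this sketch attempts.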

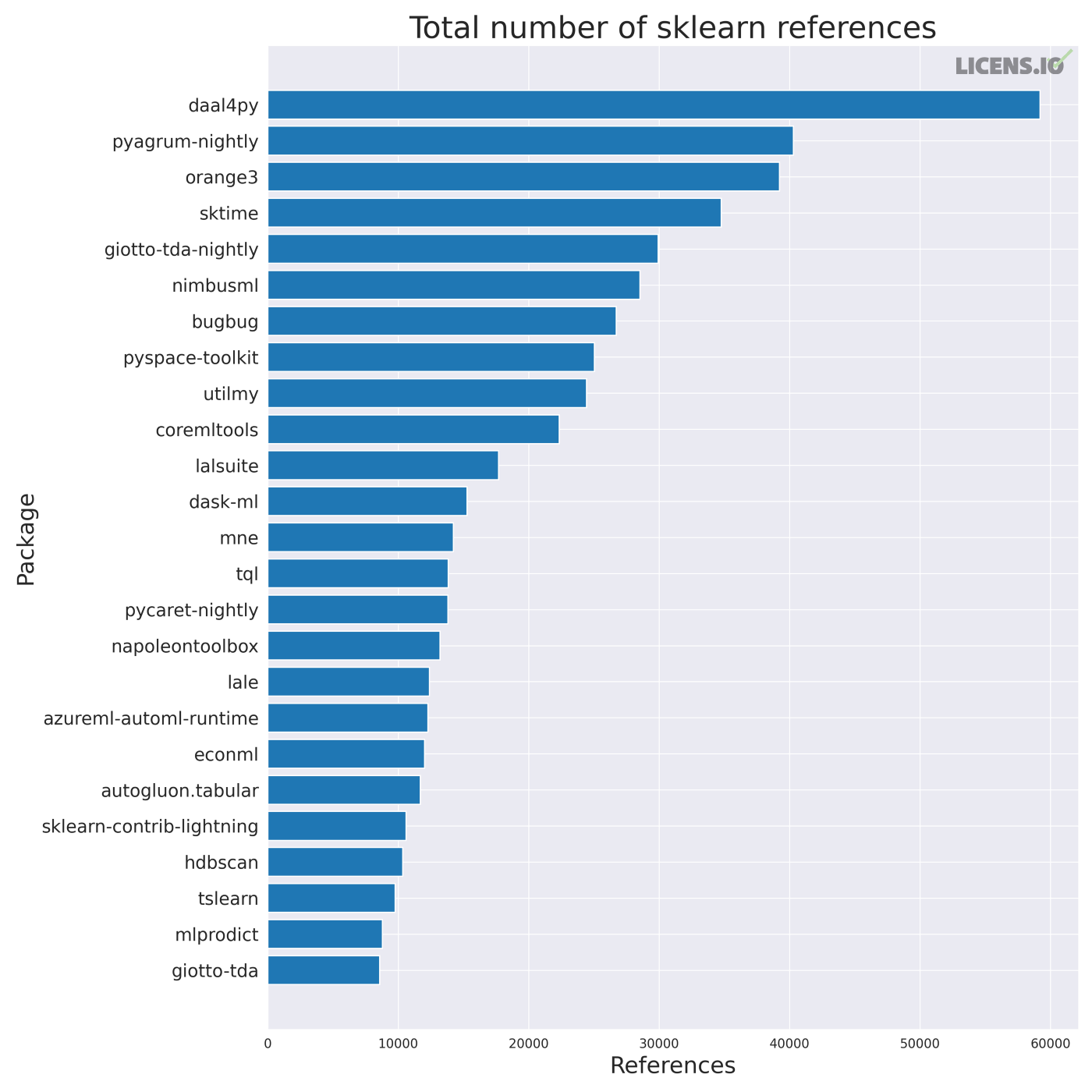

paddle was the first package — but definitely not the last. While there are many ways to count which packages have used scikit-learn the most over the years, the figure below documents the simplest way to look at the world — the total number of class imports across all releases in PyPI and Conda.

The results probably won’t surprise you, as these packages are the most well-known wrappers, accelerators, or interfaces in the scikit-learn ecosystem. Ironically, Orange3 — the ML framework I was using 20 years ago — is now one of the largest scikit-learn users under this measurement.
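For readers who want to see the shape of that measurement, the sketch below tallies class imports per package across releases. The `releases` layout and both function names are hypothetical stand-ins, and treating capitalized names as classes is a heuristic chosen here, not necessarily the rule behind the figure.

```python
import ast
from collections import Counter


def sklearn_imported_names(source: str) -> list[str]:
    """List names pulled in via absolute `from sklearn... import Name` statements."""
    names = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            if node.module == "sklearn" or node.module.startswith("sklearn."):
                names.extend(alias.name for alias in node.names)
    return names


def count_class_imports(releases: dict[tuple[str, str], list[str]]) -> Counter:
    """Tally class imports per package, summed over every release and file.

    `releases` maps (package, version) to the source text of each file.
    This layout is a hypothetical stand-in for a real package index crawl.
    """
    totals = Counter()
    for (package, _version), sources in releases.items():
        for source in sources:
            # Heuristic: treat capitalized imported names as classes.
            totals[package] += sum(
                1 for name in sklearn_imported_names(source) if name[:1].isupper()
            )
    return totals
```

Because the count is summed over all releases, a package that ships often and imports the same classes each time ranks higher than one with a single release, which is worth keeping in mind when reading the figure.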

In the next post in this series, we’ll start to dig deeper into how this adoption occurred and, more interestingly, which supervised and unsupervised methods have been most popular. Whether you’re rooting for ExtraTreesClassifier or RandomForestClassifier, we’ve got the results for you.

PS: Is there another package you’d like us to analyze or write about? Let us know!

¹ According to Google Scholar, which admittedly may over- or under-count such references.