Four Reasons Why SCA Isn’t Solving Your Supply Chain Security Issues

Dynamic Dependencies

The first pitfall is also the most common: dynamic dependencies. These dependencies are loaded based on runtime behavior. Dynamic dependencies come in different flavors: environment dependencies, internal logic dependencies, and external data source dependencies.

Environment Dependencies

Sometimes, they’re imports of packages that already exist in an interpreter or environment, such as those configured through php.ini or Python’s PYTHONSTARTUP environment variable. In other cases, they’re imports performed with built-in language functionality. In both cases, static code analysis tools typically omit these findings. Some software composition analysis tools will find these dependencies by inspecting the entire execution environment, but most will also omit them unless the software developer specifies them manually. Furthermore, SCA tools that do include the entire environment run the risk of producing false positives, creating fatigue for reviewers.

For example, Java applications that use ClassLoader or Python applications that use importlib to handle compatibility across different operating systems often fall into this category.
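A minimal Python sketch of this pattern follows. The module names are stand-ins chosen only because they ship with Python on each platform (winreg on Windows, pwd on Unix-like systems); a real application would typically choose between third-party packages in the same way. A scanner that reads only import statements sees importlib and sys here, not the module that is actually loaded.

```python
import importlib
import sys


def load_platform_module():
    """Pick a module name at runtime; static import scanning only sees importlib and sys."""
    # winreg (Windows) and pwd (Unix) stand in for platform-specific dependencies.
    name = "winreg" if sys.platform.startswith("win") else "pwd"
    return importlib.import_module(name)


backend = load_platform_module()
print(backend.__name__)
```

Because the module name is just a string evaluated at runtime, the dependency stays invisible unless a tool executes or emulates that branch.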

Internal Logic Dependencies

The second flavor of dynamic dependencies is trickier. In this case, an application dynamically installs packages based on some internal logic, such as a requirements file or a list of strings. This dynamic installation can take many forms: source control checkouts like git clone, downloads via curl, or package management commands like pip or npm. In these cases, it can be very difficult for any automated tool to identify these packages.

Examples of this flavor include many larger applications that distribute “deploy scripts” or use packages that span different package managers or build systems.
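To illustrate why, the sketch below parses a hypothetical deploy script with Python’s ast module and collects its import statements, which is roughly what a naive static scanner does. The package names in REQUIRED are placeholders.

```python
import ast

# Hypothetical deploy script: packages come from a list of strings, not imports.
DEPLOY_SCRIPT = '''
import subprocess
import sys

REQUIRED = ["numpy", "pandas"]
for pkg in REQUIRED:
    subprocess.run([sys.executable, "-m", "pip", "install", pkg], check=True)
'''


def static_imports(source: str) -> set:
    """Collect top-level module names from import statements, as a naive scanner might."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                found.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.module:
            found.add(node.module.split(".")[0])
    return found


print(sorted(static_imports(DEPLOY_SCRIPT)))  # ['subprocess', 'sys']
```

The packages the script actually installs never appear in the scanner’s output.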

External Data Source Dependencies

In the third and most extreme flavor, the dynamic dependencies to install are determined by an external data source, not internal logic. For example, the application might query an external API or request keyboard input from the user. In cases like these, it is practically impossible for static code analysis tools to identify these dependencies, and very few software composition analysis tools will identify them either unless run on “live” applications.

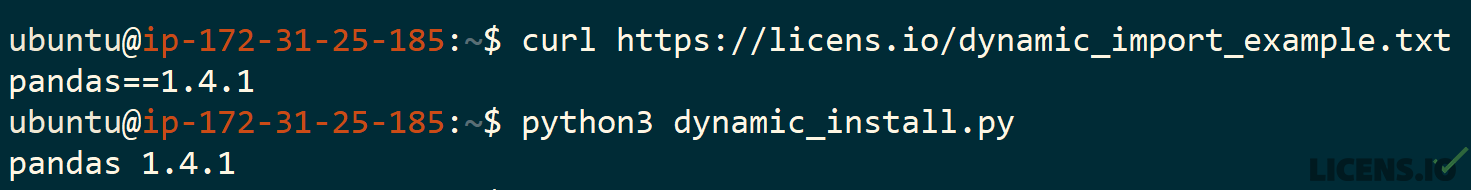

Below is a simple Python example to give you an idea of how simple but problematic this pattern is. At first glance, this script seems to use only importlib, subprocess, and requests. If you ask most SCA tools, that’s what they’ll tell you too.

But in reality, an application containing code like this will install and import any packages defined in the requested URL, as you can see below. Now imagine that the URL is only accessible via an authenticated API or internal network resource, and you can see how it can be truly impossible to determine the application’s dependencies through static analysis or most software composition approaches.
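The following is a rough, text-only reconstruction of the pattern described above, not the script from the original example. It assumes a hypothetical URL that returns a JSON array of package names. It uses the standard library’s urllib in place of requests so it runs without third-party packages. It also assumes each package’s import name matches its install name, which is not always true. It defaults to a dry run so nothing is installed by accident.

```python
import importlib
import json
import subprocess
import sys
import urllib.request

# Hypothetical endpoint; in practice it might sit behind authentication.
PACKAGE_LIST_URL = "https://example.com/packages.json"


def fetch_package_names(url: str) -> list:
    """Download a JSON array of package names from an external source."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def pip_command(package: str) -> list:
    """Build the pip command that installs a package into the running interpreter."""
    return [sys.executable, "-m", "pip", "install", package]


def install_and_import(packages, dry_run=True):
    """Install, then import, each package; assumes install and import names match."""
    loaded = {}
    for name in packages:
        if dry_run:
            print("would run:", " ".join(pip_command(name)))
            continue
        subprocess.run(pip_command(name), check=True)
        importlib.invalidate_caches()  # let the import system see newly installed files
        loaded[name] = importlib.import_module(name)
    return loaded


# Real use (requires network access and pip):
# install_and_import(fetch_package_names(PACKAGE_LIST_URL), dry_run=False)
```

Nothing in the source names the packages that end up installed; they exist only in the HTTP response.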

Cross-Language Dependencies

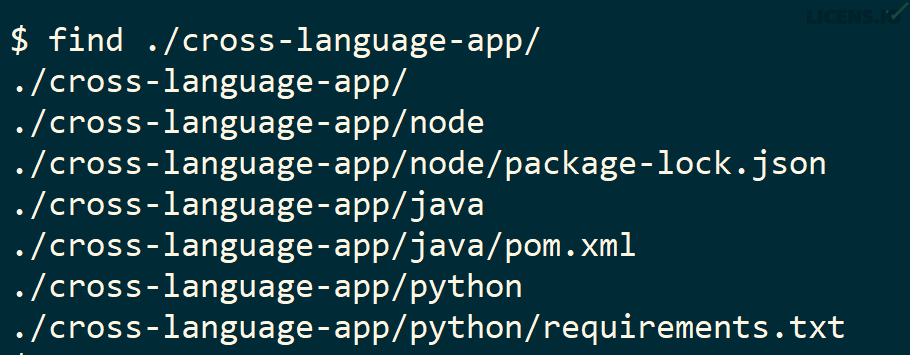

The next most common issue for software composition and static analysis tools is cross-language dependencies. This issue occurs when an application or component distributes libraries written in multiple languages to provide functionality. In many ways, all high-level languages are already cross-language, as they rely on lower-level or “system” dependencies. For example, Python and Node.js use libraries such as OpenSSL, libxml2, or zlib in their core implementations or popular libraries. These libraries are almost always low-level implementations in C or C++, some of which tie into POSIX or Windows operating system APIs.
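As a quick illustration, a standard CPython build exposes the versions of some of the C libraries it wraps. A Python project’s requirements file or lock file will not list these, yet they are part of the application’s supply chain. The ssl and sqlite3 imports are guarded because some minimal builds omit them.

```python
import zlib


def native_library_versions() -> dict:
    """Collect versions of C libraries that this Python interpreter wraps."""
    versions = {"zlib": zlib.ZLIB_RUNTIME_VERSION}
    try:
        import ssl  # some minimal builds are compiled without OpenSSL
        versions["openssl"] = ssl.OPENSSL_VERSION
    except ImportError:
        pass
    try:
        import sqlite3  # likewise optional in some builds
        versions["sqlite"] = sqlite3.sqlite_version
    except ImportError:
        pass
    return versions


for library, version in native_library_versions().items():
    print(f"{library}: {version}")
```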

However, many applications – including those written in lower-level or self-contained languages like Rust or Java – can still create high-level cross-language dependencies. For example, larger applications often combine smaller components through service-oriented architectures, like Python applications that use Java or Node microservices to convert OpenOffice documents or render web page previews. In a previous post, we looked at how such cross-language imports occurred in the Python and R ecosystems in the wake of the log4j vulnerability.

In cases like these, static code analysis tools can sometimes capture these dependencies – but only when package maintainers are aware of these complexities and actively aggregate them for final distribution. In some cases, the “final distribution” layer – typically a package or application manager – may not even allow maintainers to declare dependencies from outside of that “walled garden.” In these cases, downstream automated tools will never receive complete information from such metadata.

Many software composition analysis tools do fare better with cross-language dependencies than with dynamic dependencies. As long as the components follow standard file naming schemes – like .jar, .so, or package-lock.json – these tools can identify them within a larger application.
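A simplified sketch of filename-based detection follows. The pattern table is hypothetical and far smaller than what a real tool would use; the point is that anything not matching a known name is silently skipped.

```python
import fnmatch
import os

# Hypothetical inclusion patterns, loosely modeled on the naming schemes above.
PATTERNS = {
    "*.jar": "java-archive",
    "*.so": "shared-library",
    "*.so.*": "shared-library",  # versioned names such as libz.so.1
    "package-lock.json": "npm-lockfile",
    "requirements.txt": "python-requirements",
}


def scan_by_filename(root: str) -> list:
    """Return (path, kind) pairs for files whose names match a known pattern."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            for pattern, kind in PATTERNS.items():
                if fnmatch.fnmatch(filename, pattern):
                    hits.append((os.path.join(dirpath, filename), kind))
                    break  # first matching pattern wins
    return sorted(hits)
```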

APIs and Network Services

Like the broader world, software development also experiences trends and cycles, and one notable cycle has been between localized and remote or distributed designs. Over the last 40 years, software has moved from localized to distributed to localized to distributed architectures. Sometimes we’ve called these technologies “remote procedure calls” or RPC; other times, we’ve referred to them as web services or application programming interfaces (APIs).

Regardless of the name, the reality is that many software applications or components “offload” logic or data access to components that do not “co-exist” in the same environment or application. In some cases, access to these network services or APIs occurs through libraries or software components that are clearly associated with them. For example, most access to Amazon Web Services, Microsoft Azure, or Google Cloud relies on the providers’ open source client libraries.

However, many applications access network services or APIs through generic, protocol-level libraries. Because most network services today are provided over HTTP(S) using simple RESTful APIs, there is often little need for a full-fledged API library. As a result, key logic or data access occurs through plain HTTP requests and JSON parsing, and most software composition analysis tools do not identify these patterns.
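For instance, a call like the one below imports only urllib and json from the standard library, so a manifest-driven scan has nothing to report, even though the application depends on a remote service. The endpoint and bearer-token scheme are hypothetical.

```python
import json
import urllib.request

# Hypothetical REST endpoint and token scheme, for illustration only.
API_URL = "https://api.example.com/v1/documents/42"


def build_request(url: str, token: str) -> urllib.request.Request:
    """Prepare an authenticated JSON request using only the standard library."""
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


def fetch_json(url: str, token: str):
    """Send the request and parse the JSON body; this is the entire 'API client'."""
    with urllib.request.urlopen(build_request(url, token), timeout=10) as response:
        return json.load(response)
```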

Code Repackaging or Reuse

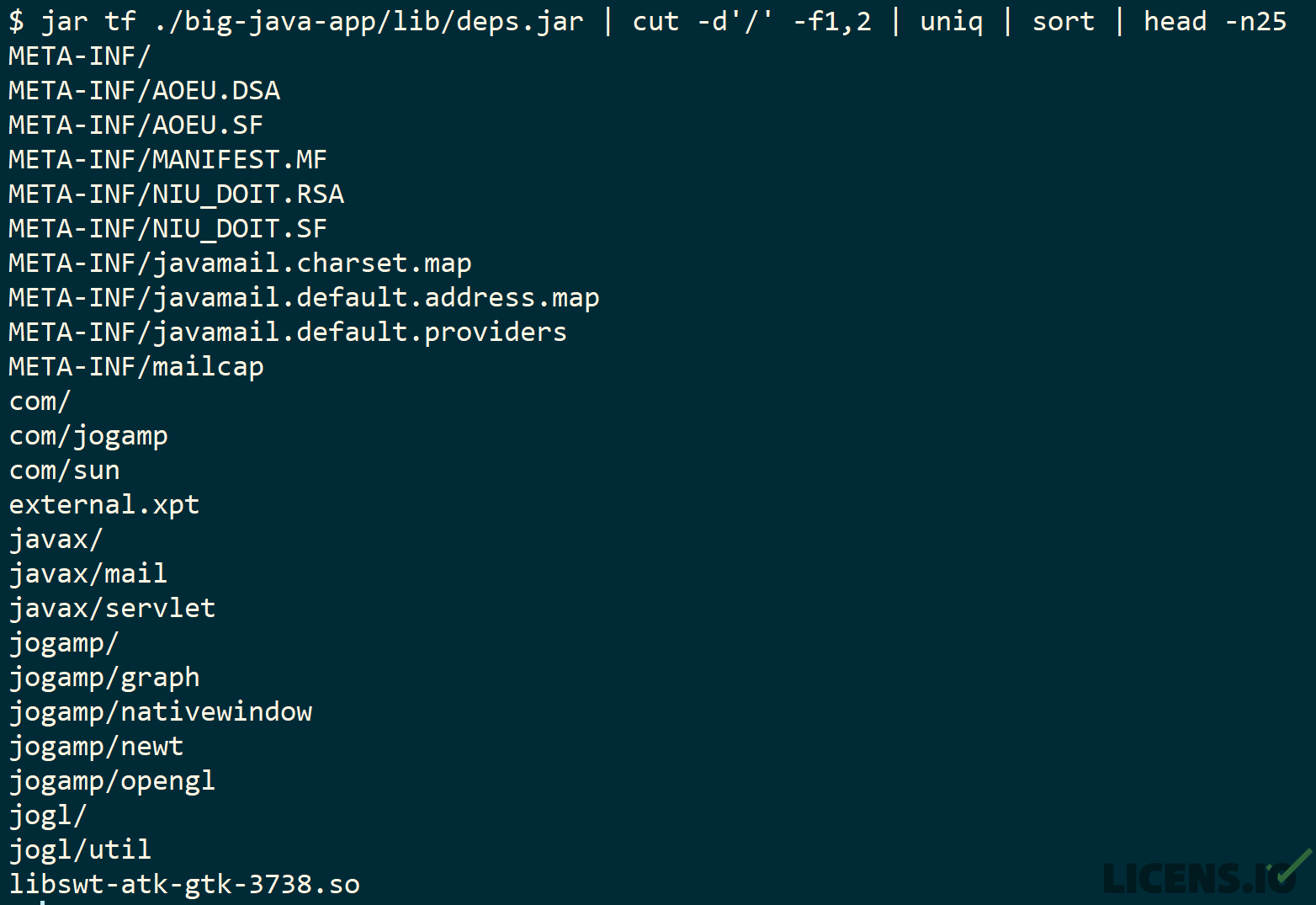

When software components are repackaged or renamed, such as when JAR files are combined into “fat” JARs, “SuperJARs,” or “UberJARs,” many SCA tools begin to struggle. Such repackaging or renaming requires a “deeper” inspection of all objects in source control or on the filesystem.

The example below is a “fat” JAR that includes not only multiple Java libraries, but also a number of shared C libraries (.so) as well.
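Since a JAR is a ZIP archive, one way to look inside a fat JAR is with Python’s zipfile module. This sketch simply buckets entries by suffix. The Maven check relies on the convention that Maven-built JARs often carry a pom.properties file under META-INF/maven/, which repackaging may or may not preserve.

```python
import zipfile


def inspect_fat_jar(path: str) -> dict:
    """List what a fat JAR bundles: classes, nested JARs, native libraries, Maven metadata."""
    report = {"classes": 0, "nested_jars": [], "native_libs": [], "maven_poms": []}
    with zipfile.ZipFile(path) as jar:
        for name in jar.namelist():
            if name.endswith(".class"):
                report["classes"] += 1
            elif name.endswith(".jar"):
                report["nested_jars"].append(name)
            elif name.endswith(".so") or ".so." in name:
                report["native_libs"].append(name)
            elif name.startswith("META-INF/maven/") and name.endswith("pom.properties"):
                report["maven_poms"].append(name)
    return report
```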

Many SCA tools avoid reading and analyzing the complete contents of all files or objects, because this I/O-intensive approach can substantially slow analysis. Even worse, for vendors pursuing SaaS business models, such designs can dramatically increase costs. To avoid reading and parsing all files, these tools instead check for patterns in file names or headers. If a file or object does not match at least one inclusion criterion, it is typically ignored for purposes of analysis.
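A middle ground between trusting filenames and parsing every file is to read only the first few bytes and compare them against known magic numbers. The sketch below recognizes ELF binaries (such as .so files) and ZIP archives (such as JARs) even after they have been renamed; the signature table is a small illustrative subset.

```python
import os

# Illustrative subset of magic numbers (the first bytes of a file).
SIGNATURES = {
    b"\x7fELF": "elf",     # Unix executables and shared objects (.so)
    b"PK\x03\x04": "zip",  # ZIP archives, including JARs and Python wheels
}


def sniff(path: str) -> str:
    """Classify a file by its leading bytes instead of its name."""
    with open(path, "rb") as handle:
        head = handle.read(4)
    for magic, kind in SIGNATURES.items():
        if head.startswith(magic):
            return kind
    return "unknown"


def sniff_tree(root: str) -> list:
    """Walk a directory and return (path, kind) for every recognized file."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            kind = sniff(path)
            if kind != "unknown":
                found.append((path, kind))
    return sorted(found)
```

This still does not look inside archives; it only avoids being fooled by a misleading name.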

In other cases, package maintainers may vendor other open source or closed source packages in their releases. There are many legitimate reasons for this practice, despite its licensing and maintainability risks; for example, vendored packages are sometimes forked or modified in ways that improve reliability or functionality. Regardless of the reason, however, confusion can arise. Notably, when the original package owner releases patches for reliability or security issues, the vendoring maintainer must re-release their package to incorporate these changes – even when they have not made any changes themselves! And when these packages are installed into a single environment or interpreter, two versions of the same package can end up conflicting. This can also confuse SCA tools, which often cannot report multiple versions of the same library within the same findings.
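One way to avoid collapsing multiple versions into a single finding is to keep every version observed per package name. The sketch below does that with importlib.metadata. Note that it only sees distributions that ship their own metadata, so a copy vendored inside another package (for example, under a _vendor directory) would still require a deeper scan. Versions are sorted as plain strings, not by version semantics.

```python
import re
from collections import defaultdict
from importlib.metadata import distributions


def normalize(name: str) -> str:
    """PEP 503 name normalization, so 'Foo_Bar' and 'foo-bar' compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def find_duplicate_versions(pairs) -> dict:
    """Map each package name to all versions seen, keeping only names with more than one."""
    seen = defaultdict(set)
    for name, version in pairs:
        seen[normalize(name)].add(version)
    return {name: sorted(vs) for name, vs in seen.items() if len(vs) > 1}


def installed_pairs():
    """Yield (name, version) for every distribution visible on sys.path."""
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:
            yield name, dist.version


print(find_duplicate_versions(installed_pairs()))
```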

Last, and most difficult to detect, is the reuse of portions of code from open or closed source projects. While wholesale copying or redistribution of files or archives can be tracked by matching hashes, analyzing code reuse typically requires methods that are far more sophisticated and costly, and less accurate. For example, if an author reused a function or class from a vulnerable OpenSSL or log4j release, detecting the resulting downstream vulnerabilities would require analysis at the function or line-of-code level. While static code analysis can often provide a great start to identifying these issues, some degree of ad hoc analysis is typically required to confirm the findings. Out of the box, software composition analysis tools simply are not designed for tasks like these.

But What About SBOMs and OpenChain?

SBOMs and OpenChain (ISO/IEC 5230) reflect a vision of data-driven, machine-readable software management. In a world where these reporting artifacts are complete, accurate, and adopted by all software creators and distributors, many of the issues currently addressed by software composition analysis will become obsolete. As we discussed in our post about the premise, promise, and perils of SBOMs, there are still some mountains to climb before this dream is realized, and we think “the old way” will sadly remain the reality for many years to come.

But even in a world where all software producers agree to create and share SBOMs, there will always be omissions. Some of these omissions may be the result of negligent processes or honest mistakes, but in other cases – like the dynamic, externally-defined import example above – even the most rigorous SDLC processes cannot know the unknowable or predict the future.

In many ways, this dilemma exposes the peril of applications or libraries that utilize such dynamic loading patterns. In many cases, these designs could be replaced with more cumbersome but safer approaches. But in reality, some components either have a genuine need for such behavior or the maintainers will not be convinced to change their design at this point. As a result, organizations should continue to be on the lookout for packages like these; any environments they inhabit will almost certainly require additional, manual review or alternative tooling for the foreseeable future.
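As a starting point for that review, a simple AST pass can flag calls that commonly load or install code at runtime. This is a naive sketch: the call list is an assumption, and it misses aliases such as `from importlib import import_module`, so it can only prioritize files for a human to read.

```python
import ast

# Assumed list of calls that often indicate runtime loading or installation.
DYNAMIC_CALLS = {
    "importlib.import_module", "__import__",
    "subprocess.run", "subprocess.call", "subprocess.check_call", "os.system",
}


def call_name(node: ast.Call) -> str:
    """Render a call target such as importlib.import_module as a dotted string."""
    parts = []
    target = node.func
    while isinstance(target, ast.Attribute):
        parts.append(target.attr)
        target = target.value
    if isinstance(target, ast.Name):
        parts.append(target.id)
    return ".".join(reversed(parts))


def flag_dynamic_loading(source: str) -> list:
    """Return sorted (line, call) pairs that may load or install code at runtime."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = call_name(node)
            if name in DYNAMIC_CALLS:
                hits.append((node.lineno, name))
    return sorted(hits)
```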

SBOMs, as commonly produced today, also do not cover “data components,” APIs, or other network services like those discussed above. As a result, when data components drive software behavior, or when applications rely heavily on remote logic or data, SBOMs are likely to contain material omissions.

Closing the Gap Today

Dynamic dependencies, cross-language dependencies, APIs and network services, and code repackaging and reuse can all create serious issues for software composition and static code analysis tools. Applications and components with such patterns can create dangerous gaps in your automated coverage – the last thing you want if you rely solely on these tools.

As we discussed above, some of these patterns – like an application that dynamically loads a dependency based on user input – simply can’t be known in advance. No static analysis technique can predict what a user will type. But does this mean that all hope is lost?

In some cases, we can address these issues without resorting to wizardry, but the answer, as usual, involves trade-offs. In the case of many dynamically-loaded dependencies, there are two options that often help capture previously-omitted results: emulation and runtime or dynamic analysis.
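Runtime analysis, in its simplest form, records what actually gets loaded when code runs. The sketch below diffs sys.modules before and after a call; plugin_loader is a hypothetical stand-in for application code. It only captures the code paths exercised during that particular run, which is the main trade-off of dynamic analysis compared with static analysis.

```python
import importlib
import sys


def modules_loaded_during(func, *args, **kwargs) -> list:
    """Run func and return the names of modules newly imported while it ran."""
    before = set(sys.modules)
    func(*args, **kwargs)
    return sorted(set(sys.modules) - before)


def plugin_loader(name):
    # Hypothetical application code that decides what to import at runtime.
    return importlib.import_module(name)


print(modules_loaded_during(plugin_loader, "colorsys"))
```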

Fortunately, cross-language dependencies are easier to address in most circumstances. Multiple static analysis frameworks can frequently be combined to produce higher-quality results – and many software composition analysis tools do a decent job out of the box, assuming package specification files or metadata follow common standards.

APIs and network services can be identified through multiple approaches. The most common and simplest approach relies on using known domains, endpoints, URLs, or request formats to identify commonly-utilized APIs. By inventorying such APIs and related metadata, SCA tools can provide useful information about not just which APIs are being used, but what type of information or processing is likely to be occurring.
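A minimal sketch of the known-endpoint approach follows. The KNOWN_APIS catalog is illustrative only; a production inventory would be much larger and would also match request formats, not just hostnames.

```python
import re
from urllib.parse import urlparse

# Illustrative catalog mapping API hostnames to a human-readable label.
KNOWN_APIS = {
    "api.github.com": "GitHub REST API",
    "api.stripe.com": "Stripe API",
    "maps.googleapis.com": "Google Maps Platform",
}

URL_PATTERN = re.compile(r"https?://[^\s'\"<>]+")


def find_api_usage(source: str) -> dict:
    """Map each recognized API hostname found in source text to its catalog label."""
    found = {}
    for url in URL_PATTERN.findall(source):
        host = urlparse(url).hostname
        if host in KNOWN_APIS:
            found[host] = KNOWN_APIS[host]
    return found
```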

Code repackaging or reuse is unfortunately often the most difficult to detect. While exact copies of files are easy to detect through filename and hash matching, source code is often copied in much more limited ways. For example, methods like simple encryption, compression, hashing, or argument parsing are often “borrowed” piecemeal from larger files. These standalone classes or functions are then copied into other larger files in the “borrowing” library. As a result, the “original” source file and the “new” source file are not identical, and hash matching at the file level will not identify such reuse.
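The difference is easy to demonstrate. In the sketch below, BORROWER contains an exact copy of one function from ORIGINAL; both sources are made up for illustration. Hashing whole files finds no match, while hashing each function’s AST (via ast.dump, which omits line numbers by default) finds the shared function.

```python
import ast
import hashlib

ORIGINAL = '''
def checksum(data):
    total = 0
    for b in data:
        total = (total + b) % 65521
    return total

def main():
    print(checksum(b"abc"))
'''

BORROWER = '''
import sys

def checksum(data):
    total = 0
    for b in data:
        total = (total + b) % 65521
    return total

def run(args):
    return len(args)
'''


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def function_hashes(source: str) -> dict:
    """Hash each top-level function's AST dump, independent of its position in the file."""
    tree = ast.parse(source)
    return {node.name: sha256(ast.dump(node))
            for node in tree.body if isinstance(node, ast.FunctionDef)}


print(sha256(ORIGINAL) == sha256(BORROWER))  # file-level hashes differ
shared = set(function_hashes(ORIGINAL).values()) & set(function_hashes(BORROWER).values())
print(len(shared))  # one function body matches exactly
```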

The best approaches to identifying reuse involve static code analysis, which can detect potential duplicates by comparing the function definitions, class designs, syntax trees, or bytecode of packages. However, the general case of such comparisons involves maintaining very large data stores for lookup, which can require either very large amounts of RAM or long parsing times for large projects or environments.
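Copies are rarely verbatim, so comparisons usually normalize the syntax tree first. The sketch below renames a function, its parameters, and locally assigned variables to positional placeholders before hashing, so a copy with renamed identifiers still matches. It is a deliberately simplified version built on assumptions: one mapping is shared across the whole source, and docstring changes or restructured logic will still defeat it.

```python
import ast
import hashlib


class Normalizer(ast.NodeTransformer):
    """Rename functions, parameters, and assigned locals to positional placeholders."""

    def __init__(self):
        self.mapping = {}

    def _placeholder(self, name: str) -> str:
        return self.mapping.setdefault(name, f"v{len(self.mapping)}")

    def visit_FunctionDef(self, node):
        node.name = "f"
        args = node.args
        for arg in args.posonlyargs + args.args + args.kwonlyargs:
            arg.arg = self._placeholder(arg.arg)
        self.generic_visit(node)
        return node

    def visit_Name(self, node):
        # Rename stores and known locals; keep globals such as sum or len intact.
        if isinstance(node.ctx, ast.Store) or node.id in self.mapping:
            node.id = self._placeholder(node.id)
        return node


def fingerprint(source: str) -> str:
    """Hash the normalized AST so renamed copies of the same logic collide."""
    tree = Normalizer().visit(ast.parse(source))
    return hashlib.sha256(ast.dump(tree).encode("utf-8")).hexdigest()


upstream = "def mean(values):\n    return sum(values) / len(values)\n"
copied = "def avg(xs):\n    return sum(xs) / len(xs)\n"
different = "def mean(values):\n    return max(values) - min(values)\n"

print(fingerprint(upstream) == fingerprint(copied))
print(fingerprint(upstream) == fingerprint(different))
```

Storing such fingerprints for every function in a large ecosystem is exactly where the memory and parsing costs described above come from.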

At the end of the day, the best approach is an unsurprising one. Organizations do best when they layer multiple analysis approaches, such as by using multiple software composition analysis or static code analysis tools. As in machine learning, ensembles of models often outperform any single model. And, as always, there’s no substitute for a healthy dose of human review.
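At its simplest, layering tools means taking the union of their findings while remembering which tools agreed. The scanner names and results below are hypothetical; components reported by only one tool are natural candidates for that human review.

```python
from collections import defaultdict


def merge_findings(results_by_tool: dict) -> dict:
    """Combine per-tool component sets, mapping each component to the tools that found it."""
    merged = defaultdict(list)
    for tool, components in sorted(results_by_tool.items()):
        for component in components:
            merged[component].append(tool)
    return dict(merged)


# Hypothetical outputs from three different kinds of scanners.
results = {
    "manifest-scanner": {"requests==2.31.0", "urllib3==2.0.7"},
    "binary-scanner": {"openssl==3.0.13", "urllib3==2.0.7"},
    "runtime-tracer": {"requests==2.31.0", "plugin-x==0.4"},
}

merged = merge_findings(results)
for component, tools in sorted(merged.items()):
    print(f"{component}: {len(tools)} tool(s) -> {', '.join(tools)}")
```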

At Licens.io, we combine expert services with a data-driven platform for risk management, valuation, and information security. Leveraging over 30TB of data on the open source supply chain and our proprietary Diligencer platform for software audit and valuation, we deliver efficient, high-quality results for organizations.