Surveying scikit-learn Usage — Part III: Clustering and Overall Popularity

As part of our ongoing focus on the Python open source ecosystem, we’ve been publishing a series on the use of scikit-learn. In our first post, we looked at the history of scikit-learn from a 10,000-foot perspective. In our second post, we looked at how tree-based ensemble methods, best exemplified by random forests, have been the most popular class of classifiers and regressors.

In today’s post, we’ll shift our focus to clustering and overall use case popularity.

Clustering

First, we’ll look specifically at sklearn’s clustering package. As always, all data comes from our licens.io software supply chain data products.

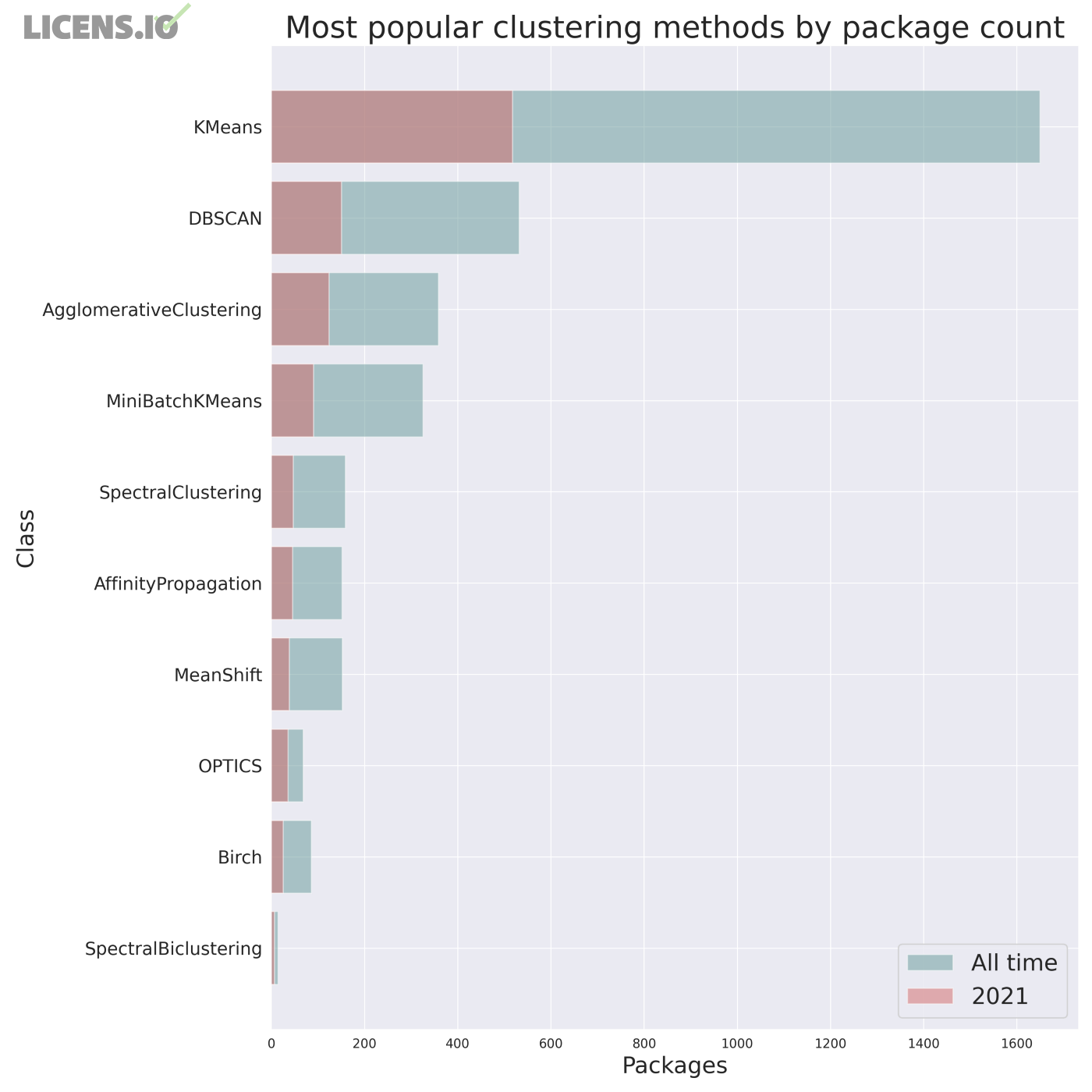

The figure below shows the relative popularity — both recently and over all time — for the methods in sklearn.cluster.

Most popular clustering methods in sklearn by number of unique packages using; 2021 and all time

If you polled a room full of data scientists about what they thought were the most popular clustering methods, K-means and hierarchical clustering would almost certainly top the list. And unsurprisingly, KMeans and AgglomerativeClustering are #1 and #3 on the list.

KMeans is clearly dominant in popularity, especially when you include the MiniBatchKMeans implementation. In fact, KMeans alone is more popular than all the other clustering methods put together.

Notably, both of these methods “suffer” from the requirement to set n_clusters — the number of unique clusters or memberships to assign to the records. While there are many contexts where the number of clusters may be known or inferred through silhouette/elbow/scree plots, there are also many cases where the user may not have any prior for this parameter.
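As a rough illustration of the silhouette approach mentioned above, the sketch below fits KMeans for several candidate values of n_clusters and keeps the one with the highest silhouette score. The synthetic data, the candidate range, and the helper name `pick_n_clusters` are all assumptions made for this example; they do not come from the survey data.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Toy data: four well-separated blobs (illustrative only).
centers = [[-10, -10], [-10, 10], [10, -10], [10, 10]]
X, _ = make_blobs(n_samples=400, centers=centers, cluster_std=1.0, random_state=0)


def pick_n_clusters(X, candidates):
    """Hypothetical helper: return the candidate k with the best silhouette score."""
    scores = {}
    for k in candidates:
        labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X)
        scores[k] = silhouette_score(X, labels)
    best_k = max(scores, key=scores.get)
    return best_k, scores


best_k, scores = pick_n_clusters(X, range(2, 8))
print(best_k, {k: round(s, 3) for k, s in scores.items()})
```

On data this clean the choice is easy; on real data the silhouette curve is often flat or ambiguous, which is exactly the situation described above.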

DBSCAN — the #2 method on our list — is most likely so popular because it does not require n_clusters to be set explicitly.
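A minimal sketch of what that looks like in practice: DBSCAN is given a neighborhood radius (eps) and a density threshold (min_samples), and the number of clusters falls out of the fit. The toy data and the specific eps and min_samples values below are assumptions picked for this example. Note that eps is still a hyperparameter that has to be chosen somehow.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_blobs

# Toy data: three tight, well-separated blobs (illustrative only).
centers = [[-10, -10], [0, 10], [10, -10]]
X, _ = make_blobs(n_samples=300, centers=centers, cluster_std=0.5, random_state=0)

# No n_clusters argument: clusters are defined by density instead.
labels = DBSCAN(eps=2.0, min_samples=5).fit_predict(X)

# DBSCAN marks points it considers noise with the label -1.
n_found = len(set(labels) - {-1})
n_noise = int(np.sum(labels == -1))
print(n_found, n_noise)
```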

To be honest, I don’t use K-Means much for a variety of reasons; when I do, I tend to use the MiniBatch implementation. Since I’m averse both to my priors “tainting” results and to in-sample hyperparameter optimization, I default to using DBSCAN or OPTICS unless there’s a good reason not to.

Overall Use

We’ve looked at clustering, classification, and regression, where KMeans and Random Forests have come out on top overall. However, scikit-learn provides many methods and classes other than these supervised and unsupervised techniques.

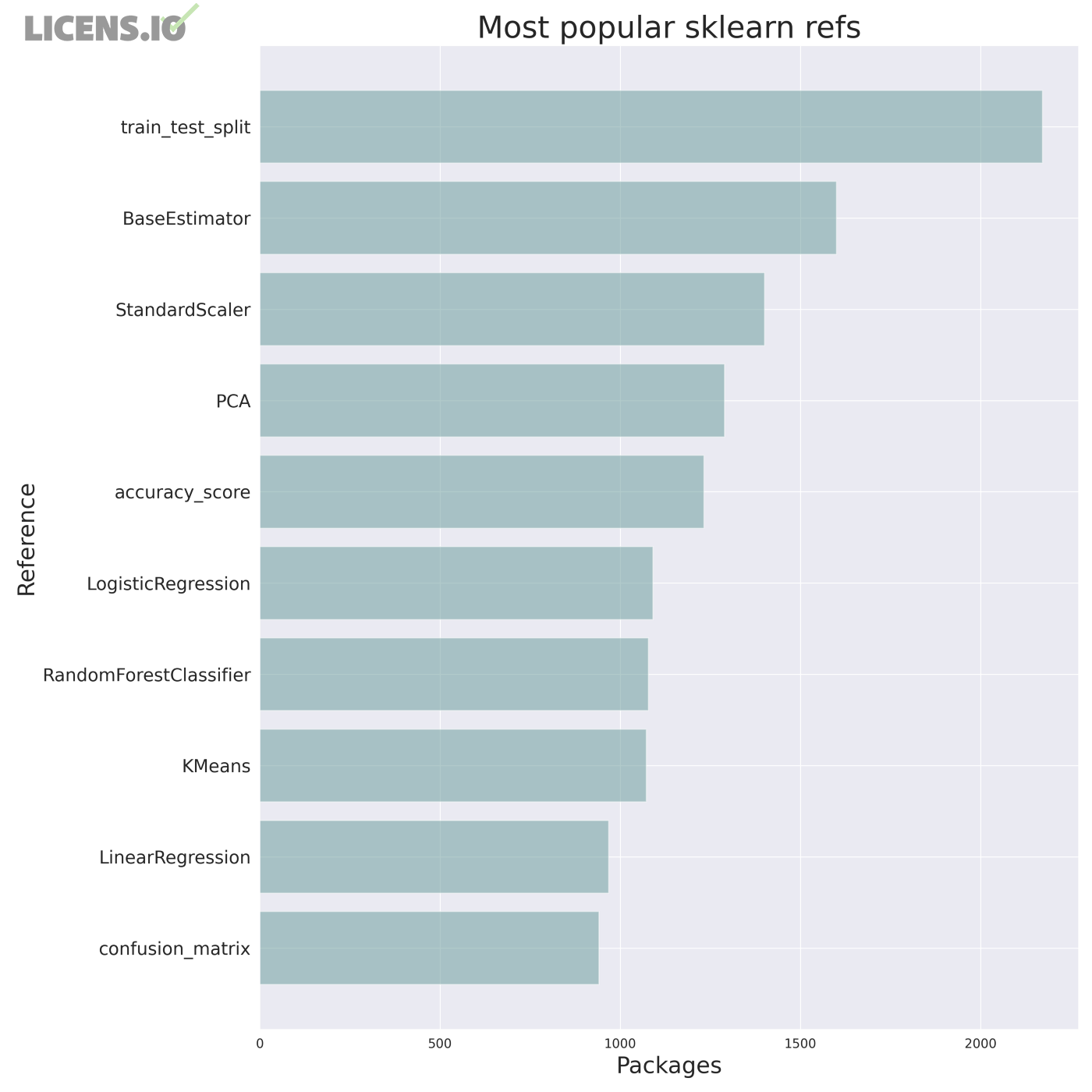

In fact, as the next figure demonstrates, the most popular references to sklearn often don’t use any of these methods.

Most popular sklearn references by number of unique packages using

The #1 most common use for sklearn is…train_test_split for splitting data. Not even cross-validation splits for complex folds. Just simple splits of aligned features and targets.
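For readers who haven’t used it, a simple split of aligned features and targets looks roughly like this. The arrays and the 25% test fraction are made up for the example.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy aligned data: row i of X is [2*i, 2*i + 1] and its target is i.
X = np.arange(24).reshape(12, 2)
y = np.arange(12)

# Shuffle and split once; rows of X stay paired with their entries in y.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

print(X_train.shape, X_test.shape)
```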

Next up at #2 is BaseEstimator. This class, as its name implies, implements the scikit-learn-compatible “protocol” for any machine learning estimator. Whenever you see a package that claims to provide a sklearn-compatible interface, this is how they do it. For example, gensim, dask, bugbug, giotto, divik, gordo, and hdbscan all use BaseEstimator to provide natural fit and predict behavior. Even “next-generation” deep learning packages like keras have implemented sklearn-compatible APIs.
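To give a feel for the pattern, here is a minimal sketch of a custom estimator built on BaseEstimator. The class `ShrunkenMeanRegressor` and its `shrinkage` parameter are hypothetical, invented for this example; they are not taken from any of the packages listed above. The conventions it follows (store constructor arguments unchanged, put learned state in attributes ending in an underscore, return self from fit) are what give you get_params, set_params, and clone for free.

```python
import numpy as np
from sklearn.base import BaseEstimator, RegressorMixin, clone


class ShrunkenMeanRegressor(RegressorMixin, BaseEstimator):
    """Hypothetical toy regressor: predicts the training mean, shrunk toward zero."""

    def __init__(self, shrinkage=0.0):
        # Constructor arguments are stored as-is so get_params/clone can find them.
        self.shrinkage = shrinkage

    def fit(self, X, y):
        # Learned state lives in attributes with a trailing underscore.
        self.mean_ = float(np.mean(y))
        return self

    def predict(self, X):
        return np.full(len(X), self.mean_ * (1.0 - self.shrinkage))


X = np.array([[0.0], [1.0], [2.0]])
y = np.array([2.0, 4.0, 6.0])

est = ShrunkenMeanRegressor(shrinkage=0.5).fit(X, y)
preds = est.predict(X)          # mean of y is 4.0, shrunk by half
params = est.get_params()       # provided by BaseEstimator
fresh = clone(est)              # unfitted copy with the same parameters
```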

After this, #3 StandardScaler and #4 PCA both implement simple preprocessing steps. To be honest, I’m not sure why someone would use scikit-learn instead of plain-old numpy or scipy for these methods unless they fit into a Pipeline, but the data speaks.
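A small sketch of the Pipeline case, assuming made-up random data with very differently scaled columns. It also includes the plain NumPy version of the scaling step for comparison; both use the population standard deviation, so they should agree.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Toy data: five features on wildly different scales (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5)) * np.array([1, 10, 100, 1000, 10000])

# Scaling and projection chained into a single fit/transform object.
pipe = Pipeline([("scale", StandardScaler()), ("pca", PCA(n_components=2))])
Z = pipe.fit_transform(X)

# The scaling step on its own, via sklearn and via plain NumPy.
X_scaled = pipe.named_steps["scale"].transform(X)
X_manual = (X - X.mean(axis=0)) / X.std(axis=0)

print(Z.shape, np.allclose(X_scaled, X_manual))
```

The practical difference is that the fitted pipeline remembers the training means, scales, and components, so the same transformation can be applied to new data with pipe.transform.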

Excluding the other supervised and unsupervised methods we’ve seen before, we also see accuracy_score and confusion_matrix — two assessment methods.
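As a quick reminder of what these two functions return, here is a tiny example on hand-written binary labels. The labels are invented for illustration.

```python
from sklearn.metrics import accuracy_score, confusion_matrix

y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0]

# Fraction of predictions that match the true labels: 4 of 6 here.
acc = accuracy_score(y_true, y_pred)

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_true, y_pred)

print(round(acc, 3))
print(cm)
# [[2 1]
#  [1 2]]
```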

In summary, we find that scikit-learn’s extensibility and utility have created an ecosystem in and of itself. Many packages that do not use any supervised or unsupervised sklearn methods either utilize its “helper” methods or create their own compatible supervised or unsupervised methods.

For the many maintainers, contributors, and funders of scikit-learn, these data will hopefully put a smile on their faces. The positive impact of its accessible implementations and the enormous adoption of its API will be felt for many years to come.