Surveying Scikit-learn Usage — Part II:

The (Un)reasonable Popularity of Random Forests

Earlier this week, we started a series surveying usage of scikit-learn — a data-driven trip down memory lane, as I called it. And today, we’re going to continue that series by looking at the relative “popularity” of different sklearn models for supervised classification and regression tasks.

This post’s title is obviously a bit of a spoiler, but the story behind the title is actually a useful place to start. Once upon a time, Caruana, Karampatziakis, and Yessenalina presented a paper at ICML. The year was 2008. The paper was titled “An Empirical Evaluation of Supervised Learning in High Dimensions.”

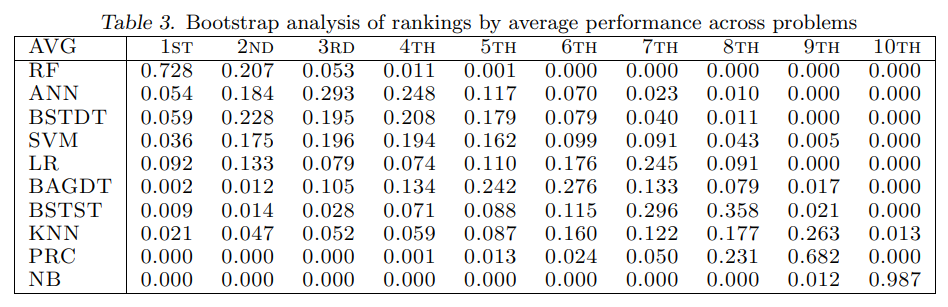

Table 3 from Caruana et al. 2008

The empirical message was pretty clear: when in doubt, start with random forests. Yes, there may be certain types of problems or datasets where other models outperform them, but in general, just as nobody got fired for buying IBM, you wouldn’t get fired for “buying Breiman.”

Seven years later, Ahmed El Deeb wrote an article on Medium titled “The Unreasonable Effectiveness of Random Forests.” Possibly more than the original Caruana research, this Medium post raised awareness of random forests, and Hacker News, Reddit, and Kaggle all amplified the message.

To be honest, I still use the same approach today. Yes, we have much “better” approaches for some problems now, but once you take into account additional costs and complexities like GPU requirements or model architecture choices, random forests still deliver great cost-adjusted results.

More important, in my opinion, is that when random forests truly fail, you know you should think about why. Is your problem really predictable — or are you just trying to predict noise? Is the information available to you actually “useful?” And even if you really believe you have the right problem and right data, if random forests perform poorly, then the cost of training a “good” DNN might be higher than usual.
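One way to act on that advice, sketched under our own assumptions rather than taken from Caruana et al. or El Deeb: compare a default `RandomForestClassifier` against scikit-learn’s `DummyClassifier` using cross-validation. If the forest barely beats a majority-class guess, that is a hint the labels may be mostly noise. The helper name `baseline_check`, the synthetic data from `make_classification`, and the randomly shuffled “noise” labels are purely illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score


def baseline_check(X, y, seed=0):
    """Hypothetical helper: mean 5-fold accuracy of a default forest vs. a majority-class guess."""
    forest = RandomForestClassifier(n_estimators=100, random_state=seed)
    dummy = DummyClassifier(strategy="most_frequent")
    rf_score = cross_val_score(forest, X, y, cv=5).mean()
    dummy_score = cross_val_score(dummy, X, y, cv=5).mean()
    return rf_score, dummy_score


# Synthetic data where the labels really do depend on the features.
X, y = make_classification(n_samples=500, n_features=20, n_informative=5, random_state=0)
rf_acc, dummy_acc = baseline_check(X, y)

# Same features, but labels drawn at random: there is nothing to learn.
rng = np.random.default_rng(0)
y_noise = rng.integers(0, 2, size=len(y))
rf_noise_acc, dummy_noise_acc = baseline_check(X, y_noise)

print(f"signal: forest={rf_acc:.2f} dummy={dummy_acc:.2f}")
print(f"noise:  forest={rf_noise_acc:.2f} dummy={dummy_noise_acc:.2f}")
```

On the signal data the forest should clearly beat the dummy; on the random labels both should hover near chance, which is exactly the “are you just predicting noise?” situation.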

Yes, DeepMind, OpenAI, and NVIDIA have been changing that calculus. Yes, I believe that conventional ML will be truly antiquated at some point, for some problems, sooner than we all think. But I don’t think that day is today.

Ironically, I use GitHub Copilot every day, but often to write traditional ML models!

Back to the world of “conventional” ML in scikit-learn. As we said, Caruana’s paper and El Deeb’s post generated a lot of attention around random forests. But was this attention carried through into action? And do people really still use random forests for everything today?

We obviously can’t answer that question exactly. But using our open source supply chain data at licens.io, we can at least come close. Because we perform deep inspection of every Python source file in packages published to repositories like PyPI, we really do know which specific classes or packages are being used, regardless of what the metadata or requirements.txt files say.
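For readers curious what source-level inspection can look like, here is a minimal sketch built on Python’s standard `ast` module. It is an illustration, not the licens.io implementation: the helper name `sklearn_references` is hypothetical, it only follows a few common import patterns, and it treats a CamelCase final name in an attribute chain as a class reference, which is a heuristic.

```python
import ast
from typing import Dict, Optional, Set


def _dotted_name(node: ast.AST) -> Optional[str]:
    """Turn an attribute chain like `a.b.C` into "a.b.C"; None if it is not a plain chain."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None


def sklearn_references(source: str) -> Set[str]:
    """Hypothetical helper: fully qualified sklearn names referenced in one source file."""
    tree = ast.parse(source)
    refs: Set[str] = set()
    aliases: Dict[str, str] = {}  # local name -> fully qualified sklearn path

    # Pass 1: imports. ast.walk is breadth-first, so gather every alias first.
    for node in ast.walk(tree):
        if isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            if node.module.split(".")[0] == "sklearn":
                for alias in node.names:
                    full = f"{node.module}.{alias.name}"
                    refs.add(full)
                    aliases[alias.asname or alias.name] = full
        elif isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] == "sklearn":
                    # `import sklearn.svm as svm` binds `svm`; a bare
                    # `import sklearn.svm` binds only the top-level `sklearn`.
                    if alias.asname:
                        aliases[alias.asname] = alias.name
                    else:
                        aliases["sklearn"] = "sklearn"

    # Pass 2: attribute chains rooted at an sklearn alias, e.g. `svm.SVC`.
    for node in ast.walk(tree):
        if isinstance(node, ast.Attribute):
            dotted = _dotted_name(node)
            if dotted is None:
                continue
            head, _, rest = dotted.partition(".")
            # Keep only chains ending in a CamelCase name (likely a class).
            if head in aliases and rest.split(".")[-1][:1].isupper():
                refs.add(f"{aliases[head]}.{rest}")
    return refs
```

Because this reads the code rather than the package metadata, a dependency declared in requirements.txt but never imported simply never shows up.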

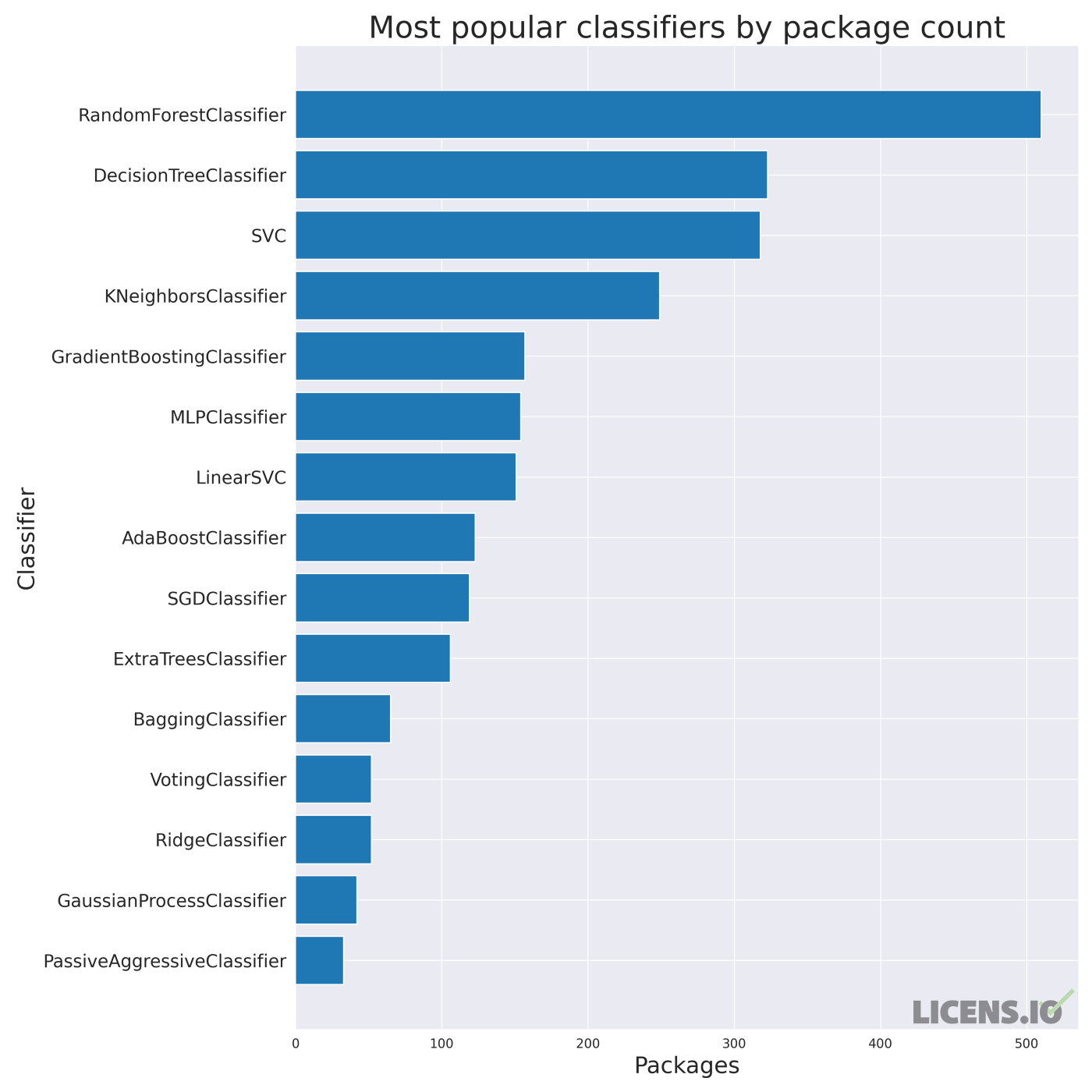

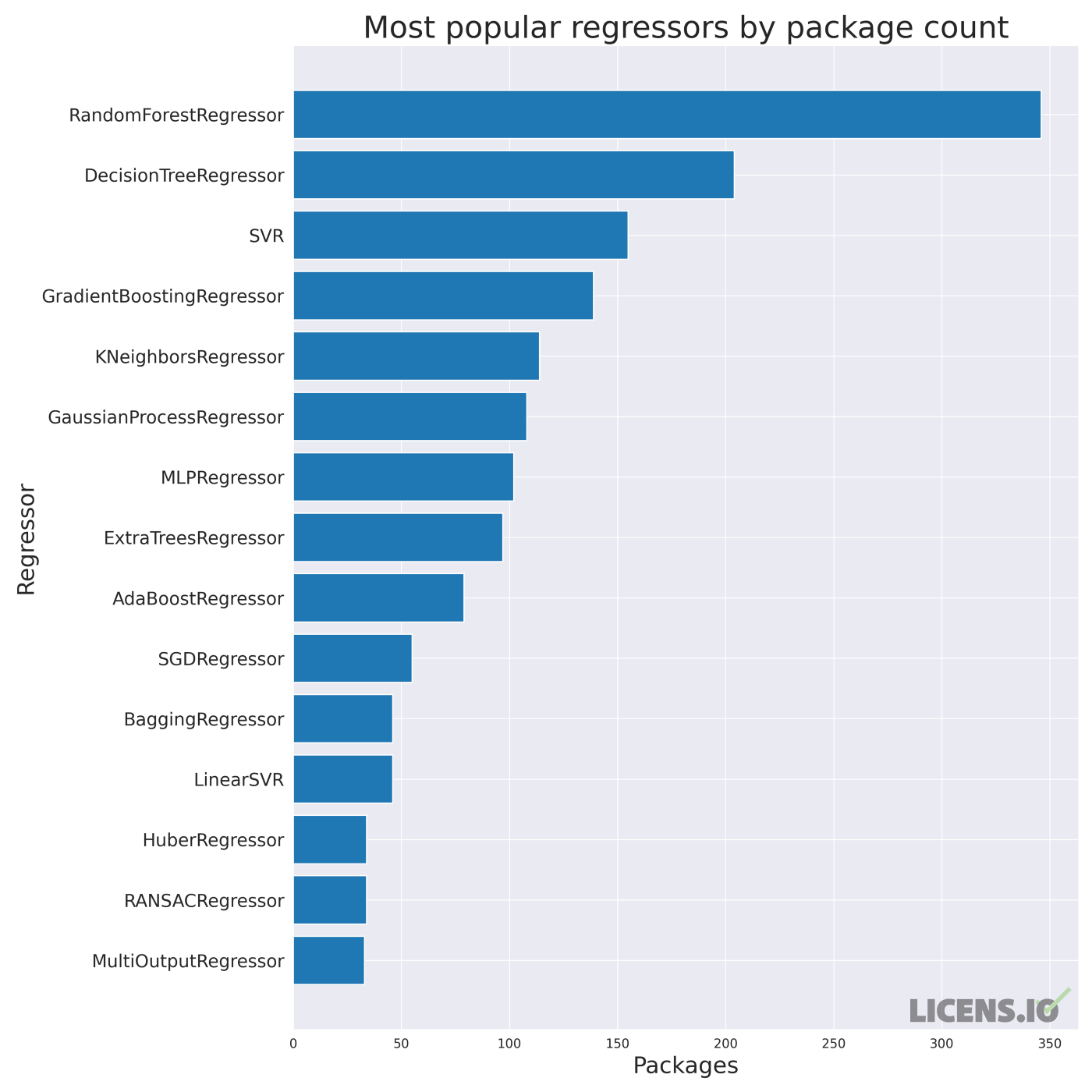

For this analysis, we looked at the number of unique packages that reference each sklearn estimator. I’ve broken up the data into two groups — one for classification estimators and one for regression estimators. And instead of looking at all history, I’ve restricted the analysis to the last year of releases — partly because sklearn’s interfaces have changed, but also because we’re trying to answer the question about usage today.

The figures below — as the title implies — tell a clear story. Random forests in their traditional formulation, still hold the top spot on both regression and classification tasks. If you count the other tree-based ensembles as random forests, the trend is even more clear. Interestingly, people are still using sklearn heavily as an interface to LibLinear and LibSVM — just like I was when I first found sklearn.

Most popular scikit-learn classification estimators

Most popular scikit-learn regression estimators
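The counting behind rankings like these is simple. Here is a minimal sketch, assuming the inspection step has already produced one `(package, release_date, estimator)` record per reference; the record format, the `TASK` lookup table, and `rank_estimators` are hypothetical stand-ins, not the actual licens.io schema.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical task labels for a handful of estimators; a real analysis
# would need a complete classifier/regressor mapping.
TASK = {
    "RandomForestClassifier": "classification",
    "LogisticRegression": "classification",
    "SVC": "classification",
    "RandomForestRegressor": "regression",
    "LinearRegression": "regression",
}


def rank_estimators(records, as_of, window_days=365):
    """records: iterable of (package, release_date, estimator_name).

    Returns {task: [(estimator, n_unique_packages), ...]}, most popular first.
    """
    cutoff = as_of - timedelta(days=window_days)
    packages = defaultdict(set)  # estimator -> set of packages referencing it
    for package, released, estimator in records:
        # Only releases inside the window, and only estimators we can label.
        if cutoff <= released <= as_of and estimator in TASK:
            packages[estimator].add(package)

    ranked = defaultdict(list)
    for estimator, pkgs in packages.items():
        ranked[TASK[estimator]].append((estimator, len(pkgs)))
    for task in ranked:
        # Sort by package count (descending), then by name for stable ties.
        ranked[task].sort(key=lambda item: (-item[1], item[0]))
    return dict(ranked)


records = [
    ("pkg-a", date(2022, 3, 1), "RandomForestClassifier"),
    ("pkg-a", date(2022, 6, 1), "RandomForestClassifier"),  # same package, counted once
    ("pkg-b", date(2022, 5, 1), "RandomForestClassifier"),
    ("pkg-b", date(2022, 5, 1), "SVC"),
    ("pkg-c", date(2020, 1, 1), "SVC"),  # outside the one-year window
    ("pkg-c", date(2022, 7, 1), "LinearRegression"),
]
result = rank_estimators(records, as_of=date(2022, 12, 31))
print(result)
```

Counting unique packages rather than raw references keeps a single package that mentions `RandomForestClassifier` in dozens of files from dominating the ranking.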

Who knows how long this trend will last? In our next post in the series, we’ll try to actually measure recent trends and extrapolate from them. We’ll combine the time series data with package classifications to build a machine learning model (with a random forest regressor!) to predict what next year’s scikit-learn use will look like. See you next time!

PS: We’re staying focused on sklearn proper in this series, but we do have a separate post planned to cover other libraries like xgboost (as well as keras, TF, etc.).